Time for a new article! After studying Microsoft, we’re going to look at one of its major competitors: Apple Inc. (formerly Apple Computer, Inc.). Since everything can’t be covered at once, this article focuses on Apple’s desktop computers, and more specifically on Apple’s operating systems, even though some attention to hardware is needed to get a good picture of the whole thing.

THE APPLE I

Like many stories in the world of computing, this one begins with students in a garage around 1975. In fact, history shows that many important innovations in computing come from amateurs and research projects. This is easily explained: the focus on costs and profits that most companies have is not fertile ground for risk-taking, and trying to innovate is always risky.

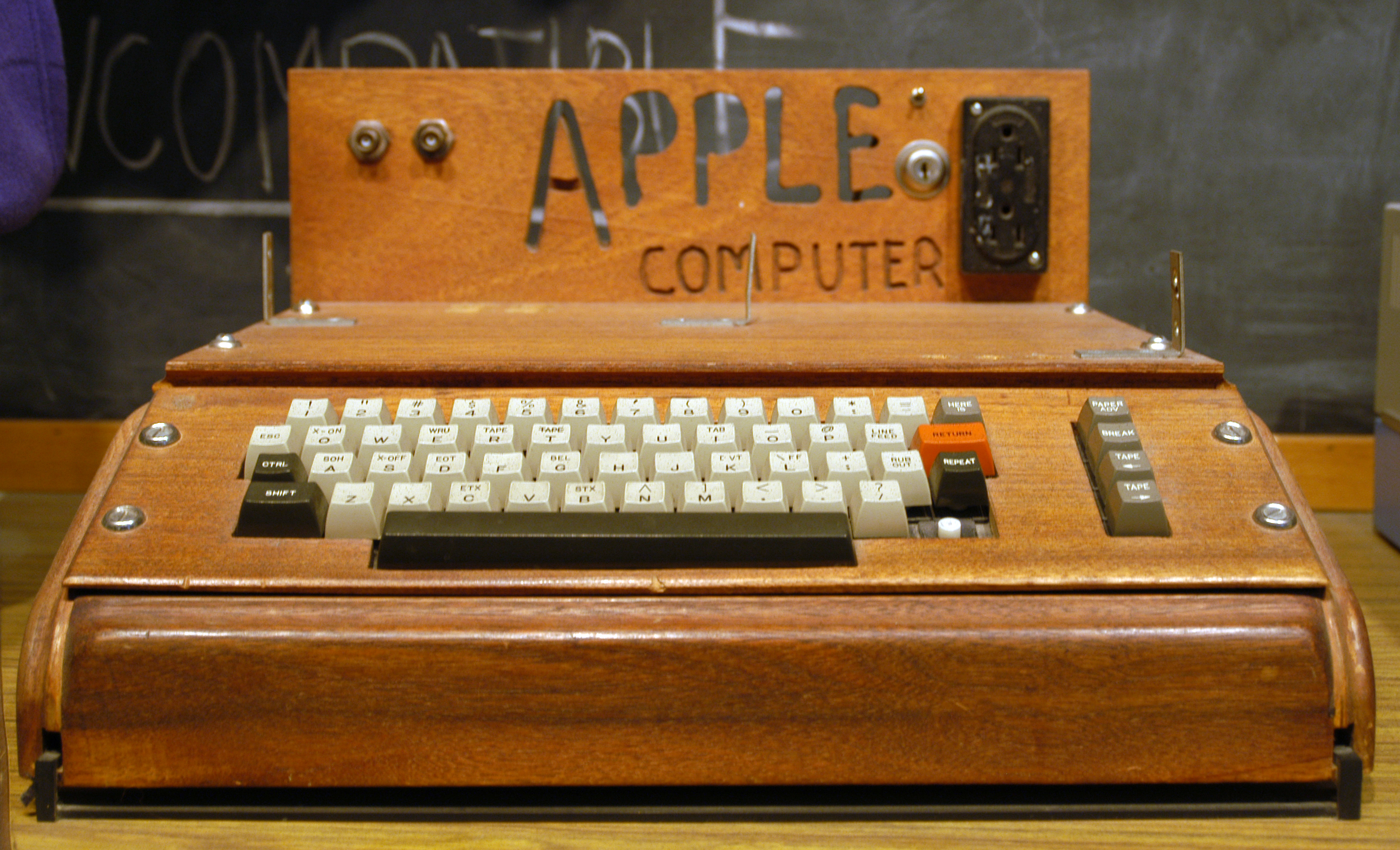

Here we had, again, two students: Steve Jobs and Steve Wozniak. The first was an idealist with a talent for persuasion, the second a skilled computer engineer. Their idea was simple: using a computer should not be an ordeal reserved for geeks, as it was with the switches and blinking lights of the Altair 8800. They both felt that, provided the price was not excessive, there was a market for a more user-friendly computer. This idea took the form of the Apple I, one of the first computers designed to be used with a keyboard and a monitor, long before the IBM PC. It cost 666.66$ and was especially small for its time. Because of tight cost constraints, the Apple I wasn’t strictly a complete computer: when one bought an Apple I, one got a circuit board carrying the CPU, some RAM, and the circuitry handling video and keyboard, and that was all. Power supply, case, keyboard, and monitor had to be bought or made separately (although an ordinary TV set would do well in the latter role). The product was first introduced at their computer club.

Having received some criticism about the lack of a mass-storage device (meaning that every user program had to be retyped on the keyboard each time the computer was turned on), Jobs and Wozniak later introduced an inexpensive interface for recording data to, and reading data from, audio cassettes. However, the poor quality of tapes and the difficulty of achieving good synchronization between the computer and the tape recorder made this solution extremely unreliable. Around the same time, their main vendor introduced a wooden case in order to make the computer look somewhat more finished. The Apple I became more widespread, with Jobs and Wozniak selling around 200 units, which allowed them to create a company, Apple, and to envision a more mature and ambitious project: the Apple II.

The Apple I in its pretty wood case

THE APPLE ][

Introduced in 1977, the Apple II brought much more powerful hardware, including color graphics capabilities. On the software side, it included a BASIC interpreter, which made it simpler to program. All needed components were now bundled with the computer, which itself was enclosed in a plastic case. The case was an idea of Steve Jobs, who also tried to prevent Wozniak from including many expansion slots; those slots would later prove to be an excellent idea. Wozniak also later introduced a floppy disk drive, made at extremely low cost through lots of hacks and unorthodox engineering practices, of which Wozniak had some mastery. The Apple II was extremely successful.

The first Apple II, with two floppy disk drives

Thanks to Wozniak’s vision of an expandable machine, lots of peripherals were added to the Apple II, allowing it to produce sound, run software written for competing machines, and use additional processors in order to run faster. Apple itself introduced several new releases of the Apple II (II+, IIe), adding various refinements like better floating-point arithmetic, the ability to display lower-case characters, and support for European languages.

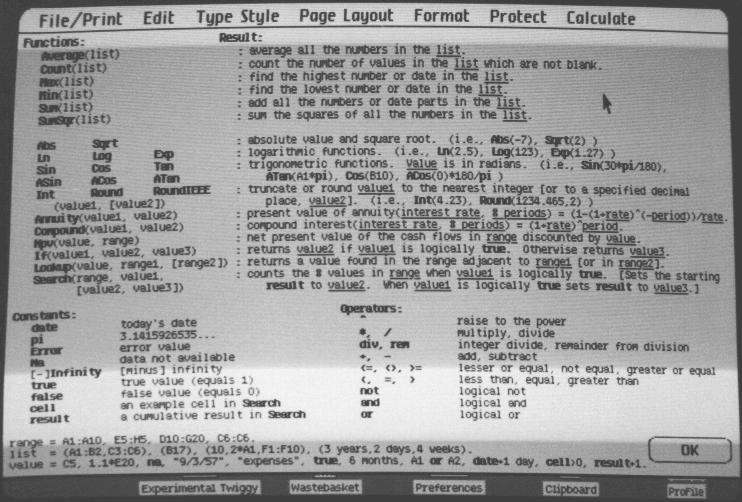

While the Apple II sold well as a home computer, and especially in the education world, it made its way into the business market too, with the introduction of two powerful tools: VisiCalc (one of the first spreadsheets) and AppleWriter (a word processor with some very powerful features, like the use of macros for repetitive tasks).

THE APPLE ///

While the Apple II generated large profits, Apple started to realize that it needed new products addressing the hardware and design limitations of the Apple II. Three projects emerged: the Apple Lisa (for the business market), the Apple Macintosh (for the consumer market), and the Apple Sara (only made in order to have something to release while the other two were being worked on).

Logically, the Apple Sara was the first to be released, in 1980. It addressed some limitations of the Apple II, like the need to plug new devices into specific slots depending on their role (the display card in one slot, the sound card in another…), introduced several refinements in mass storage like more advanced file system management, and added a simpler way to manage system settings and applications (though Apple almost dropped that feature). Steve Jobs introduced the idea of making the computer completely silent, forbidding the use of fans and air vents. The Sara was sold under the Apple III name, with a 4340$ price tag and great fanfare in spite of its shortage of interesting features, and it came with many problems: significant overheating that physically damaged components, a built-in clock circuit that died within a few hours, and Apple II applications that did not work properly, contrary to what Apple’s ads said. Steve Wozniak later stated that this was because the system’s design was no longer done by the engineering division, but by the marketing division. Apple fixed these issues in a revised Apple III and later in the Apple III Plus, but the machine never recovered from its bad reputation.

The infamous Apple III

A year later, in 1981, IBM introduced its IBM PC, bundled with Microsoft’s DOS. It would eventually outsell most Apple computers, partly because of its good price/capability ratio, partly because of its relatively low price tag, and partly because of IBM’s enormous influence.

THE LISA

Then, as the press reported that great things were happening at Xerox PARC, someone told Jobs to take a look. Greatly impressed by the potential of the GUI, Jobs convinced people at Apple to adopt such graphical interfaces. This first happened on the Lisa project, which he directed, at least at the beginning.

The Lisa had one stated goal: increasing productivity at work by making computers easier to use. This clearly was the place to introduce a GUI. The Lisa had powerful hardware for its time, including a hard disk drive AND two floppy disk drives. On the Lisa’s monochrome screen, everything was graphical, including the menu used at startup to choose which mass storage device to boot from (and, in fact, the entire BIOS). The Lisa offered a choice of third-party GUIs that was later removed, and preemptive multitasking ahead of its time (even the relatively powerful hardware of the Lisa wasn’t ready for that). It introduced a desktop with file browsing abilities that will look somewhat familiar to Windows 95 users (this is normal, since Windows stole a lot of ideas from it).

The Apple Lisa Desktop and file browsing capabilities

However, the Lisa had its own way of doing some things. As an example, menus were not attached to application windows, but gathered in a single menu bar at the top of the screen. This saved precious space on the small screens of early Apple computers and has been kept up to modern Apple operating systems, even though it often confuses new users: it ignores the Gestalt law of proximity (people perceive distinct things as related only if they are close to each other) and makes them think the menu bar is trying to drive them crazy by changing every time they click on a window. Another difference was the document-oriented (as opposed to application-oriented) interface: one had several links for creating documents, but no direct access to the applications creating and using them. This was a bad idea, too, since some applications (like a calculator or a game) do not generate documents, which made it necessary to add links to applications nonetheless, and hence led to interface inconsistency.

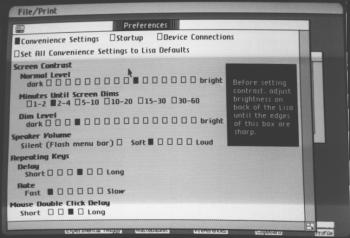

The Lisa introduced a graphical way to manage system settings, too. One point its successors did not copy, however, is the use of radio buttons instead of sliders, for ergonomic and aesthetic reasons that should have been obvious but weren’t to the Lisa team.

Preferences settings, the Lisa way.

In terms of bundled applications, the Lisa was very interesting and feature-rich :

- A calculator: Computers went back to their origins with this. For some reason, the Windows Calculator, released some time later, is almost pixel-for-pixel identical to this application in its basic mode, except for the use of colors.

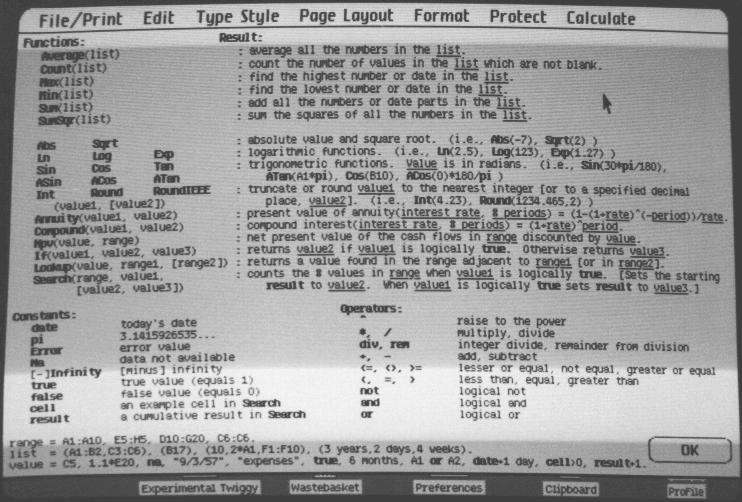

- LisaCalc : Nothing new or innovative in this one, really. Just the same as a console spreadsheet like VisiCalc, but in a window.

- LisaDraw : LisaDraw introduced some drawing capabilities, something between what Microsoft Paint is now and modern drawing programs like Paint.net or Corel Draw, including line patterns and page layout options.

- LisaGraph: It draws graphs. It does so well and is very simple to use. It does not include useless features, although it lacks a useful one (plotting from equations). Programs the way they should always be.

- LisaList: A database program with nothing special about it.

- LisaWrite: A simple word processor in the WordPad style that was commonly criticized for its lack of features, especially compared with Apple’s own AppleWriter.

- LisaProject : A strangely unintuitive tool for managing projects including the ability to draw graphs and get a timeline view.

- A text-mode help: Yes, you read that right. This marks the beginning of system designers’ lack of interest in making help files that don’t suck, a tendency that was here to stay.

The Apple Lisa’s infamous help system.

Released in 1983, the Lisa never became widely popular because of its insane 10,000$ price tag and lack of third-party software. But it was an interesting product nonetheless, and one might argue that this failure taught Apple a lesson about selling overpriced computers.

An Apple Lisa and an old HDD

THE MACINTOSH

Started around the same time as the Sara and Lisa projects, the Macintosh project was originally led by Jef Raskin, who envisioned computing for the masses and felt that the text-only interface of the Apple II was not the way to go. However, his vision of “Computers by the Millions” was closer to a PDA than to modern desktop computers. The machine was fairly small, with a 9-inch monitor and a floppy disk drive for user applications. The bundled applications were accessed through clearly labelled function keys, along with automatic detection of the nature of the user’s input (typing text would launch a word processor, typing digits would launch a calculator…). Raskin also had several contacts at Xerox; it was he who introduced Steve Jobs to PARC, and he did a lot of work on the graphical interfaces of both the Lisa and the Macintosh. Then Jobs, after being removed from the Lisa team for his dictatorial behavior and for slowing down the project, was told to lead the Macintosh team instead.

Jobs completely redid the Macintosh’s design to make it closer to an underpowered Lisa, to the point of eventually making a disgusted Raskin leave Apple. Through great engineering efforts, the team reached its goal, offering about the same features as the Lisa at a quarter of its price. With a memorable ad inspired by Orwell’s “1984” (with IBM implicitly starring as Big Brother), containing as little non-competitor-bashing content as Apple’s later ads, Apple launched the Macintosh.

The Macintosh included the following :

- The first useless-but-pretty eye-candy : The Macintosh main interface, called the Finder, displayed a pretty 3D-ish picture while starting up, and opening windows was the source of a “zooming” effect.

Zooooooom !!!

- A powerful file browsing interface: Designed to be a cheaper clone of the Lisa, the Macintosh introduced an interface paradigm for browsing files that would be more or less copied by all future GUIs. Disks appear on the desktop when inserted to allow quick access to them, double-click is used to open files and applications, and windows can be grabbed and moved, and closed using a button in their title bar. Files and folders can be copied and moved through drag-and-drop, and renamed by clicking on their names. A list view was introduced, too, though it lacked basic things like drag-and-drop, which made it somewhat useless.

- Semi-multitasking: The Macintosh could not run several applications at the same time. When an application was launched, the Finder was hidden to prevent other applications from being run. However, the Finder itself could do multiple things at once. As an example, it came with several tools that could run at the same time as any application, and as one another. So the Macintosh was capable of multitasking, but only Apple’s own tools were allowed to actually do it… Fine…

- Pretty but impractical control panel: Trying to make every single setting fit in a tiny space, Apple managed to do it. But the result, while pretty and reassuring, is somewhat… cluttered?

Finder tools and the control panel

- Automatic file/application association : Using some filesystem trick, the Macintosh automatically detects which application uses a specific file. The application then only has to be on the computer : no installation process needed.

- Few bundled apps: Aside from the Finder accessories, the Macintosh only included a simple word processor called MacWrite (identical to WinXP’s WordPad, if you need a copy to look at) and a drawing application called MacPaint (pixel-perfect identical to the future Microsoft Paint, except for the inclusion of drawing patterns).

MacPaint running at the same time as the calculator, with its patterns at the bottom.

- Absolute lack of horsepower: Programming complex applications on the Macintosh was challenging because of its limited memory (the operating system having painfully managed to take only half of it). It had no HDD, so one had to put in the Mac OS floppy disk at boot time, then eject it before inserting another floppy disk to launch an application.

- Not developer-friendly at all: Along with memory limitations, the Macintosh lacked any kind of development tool more advanced than the combination of a plain text editor and a compiler. Except for Bill Gates, few people dared to make software for it.

- Limited file system: The Macintosh could only manage 128 files, and the folder hierarchy was implemented in a primitive way where only one level of folders was supported.
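The automatic file/application association mentioned in the list above can be pictured with a small sketch. It assumes the classic Mac OS approach of tagging every file with a four-character type code and a four-character creator code, the latter naming the application that should open it. The `MacFile` class, the `find_application` function, and the sample disk contents are hypothetical names made up for this illustration, and the codes shown are illustrative values rather than an authoritative list.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class MacFile:
    name: str
    type_code: str      # what kind of file this is, e.g. "APPL" for an application
    creator_code: str   # which application created (and should open) the file

def find_application(document, disk):
    """Return the application on `disk` whose creator code matches the document's."""
    for candidate in disk:
        if candidate.type_code == "APPL" and candidate.creator_code == document.creator_code:
            return candidate
    return None  # no matching application is present on this disk

# Illustrative disk contents (names and codes are assumptions made for this sketch).
disk = [
    MacFile("MacWrite", "APPL", "MACA"),
    MacFile("MacPaint", "APPL", "MPNT"),
    MacFile("Letter to Bill", "WORD", "MACA"),
    MacFile("Turtle sketch", "PNTG", "MPNT"),
]

letter = disk[2]
app = find_application(letter, disk)
print(app.name if app else "application not found")
```

Since the lookup only needs the application file to be present on a disk, copying that file is enough to make its documents open correctly, which is why no installation step is required.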

Thanks to a graphical interface that was extremely impressive for its time, the Macintosh initially sold well, but then sales dropped. It wasn’t even close to the Lisa in terms of failure, but it clearly didn’t manage to beat IBM in the consumer market. Part of this was due to its still hefty price given its relatively low capabilities (monochrome graphics, small screen, lack of developer support…), a price that was ironically only due to Apple’s CEO trying to make more profit (with normal margins, the machine would have sold at around 2000$). The lack of available software (with the notable exception of Microsoft Word) was often cited as another reason for the Macintosh’s low sales. Then there was a horrible marketing decision made on both the Lisa and the Macintosh: making it a closed platform, in the sense both that only Apple could make Macintosh-compatible computers and that its upgrade possibilities were extremely limited.

Allegedly, this would make writing software for the Macintosh easier, since there would be no need for various machine capability checks, all Macintoshes being the same computer inside. However, this totally fails to consider the possibility that different users might have different needs. Users with high performance needs require hardware that keeps up with the quick evolution of computing, which a single brand with a single model just can’t provide (this was particularly well illustrated by the problems caused by the lack of an HDD on the Macintosh). Conversely, users with low performance needs should not pay for extra hardware they don’t need. However, for most of its history, with the notable exception of the modern iPod line of products (which is by far, as a strange coincidence, their most successful product), Apple has generally kept thinking that all its users are some sort of robots with the same characteristics, needs, and big wallet…

Anyway, Apple managed to steady its sales again by introducing the LaserWriter, the first reasonably cheap PostScript laser printer, and through the appearance of Aldus PageMaker, which together made the Macintosh one of the top platforms for desktop publishing. Through the introduction of the LOGO programming language (software designed to teach programming to children, where one makes a turtle move around the screen using BASIC-like programs), along with several deals involving lots of money and computers, the Macintosh also managed to maintain Apple’s leading position in the education world.

In 1985, Apple got rid of Steve Jobs after internal disputes linked to his lack of respect for any authority other than his own, and Microsoft introduced its own graphical operating system, called Windows.

The following years were full of new releases of the Macintosh (addressing its lack of memory, hard disk support, and expandability) and of the Apple II, which eventually got a Macintosh-like graphical interface. Apple also introduced the Macintosh Portable around that time. I don’t know if the word “laptop” can still be used for a device weighing over 15 pounds, but I’m sure the word “portable” is totally wrong. Most of its weight came from the fact that it used a lead-acid battery like those you see in cars. The battery lasted 10 hours, or a month in sleep mode, but… well… its slow performance, bulkiness, and lack of backlight weren’t worth the 6500$ price tag, and its sales reflected this simple fact. Later, Apple tried making thinner, cheaper, and backlit computers, the PowerBook line, and got more sales than I can count here.

In the meantime, the OS, now called Mac OS, was often updated, gaining the following interesting features along with support for new hardware:

- AppleTalk and AppleShare: A non-standard networking system having ease of use as one of its main goals. Through advanced auto-configuration technology, all that was required to add a computer or peripheral to a network was to plug it in, and optionally give it a name. AppleShare added various capabilities to AppleTalk networks, like print/web/email server management and file sharing.

- A proper file system: Through the use of the Hierarchical File System (HFS), the Macintosh finally gained the ability to store more files and proper folder handling, along with shortcut support (called aliases on the Mac).

- Cooperative multitasking: First available as an option, then built into the main system. Read case study 2 to know why it’s a bad idea, but well… Hardware was not ready for anything else yet, and it’s better than no multitasking at all. Unlike Windows, though, Mac OS chose to help applications a little with the task of multitasking: tweaks were added to the event handling system in order to permit automatic task switching.

- Swapping support: Some time after the release of Windows 3, the Macintosh finally got access to “superior” (meaning slow but stable) bloatware management.

- System extensions : A simple way for third-party developers to add new functions and hardware support to Mac OS, in the form of code injection in the operating system. Insecure, unprotected, and major source of crashes, this feature, similar to Windows’s VXDs, however permitted third-party improvements to the operating system.

- AppleScript: A way for users to write simple, interpreted programs (called “scripts”) having full access to all system capabilities. Perfect for automating tasks, this feature definitely proved that the command line wasn’t the only paradigm for doing so.

- Various system and UI tweaks and improvements : Balloon tips (cf. tooltips), proper color support and a color interface, TrueType fonts, and better sound support in form of higher-quality playback and more advanced API
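The cooperative multitasking item in the list above can be illustrated with a minimal sketch. It is not Mac OS code: Python generators stand in for applications, each `yield` stands in for the moment an application hands control back to the system (for instance when it asks for its next event), and the `task` and `run_round_robin` names are hypothetical.

```python
from collections import deque

def task(name, steps, log):
    """A cooperative task: does one unit of work, then yields control."""
    for i in range(steps):
        log.append(f"{name}:{i}")
        yield  # voluntarily hand control back to the scheduler

def run_round_robin(tasks):
    """Run generator-based tasks in turn until all of them have finished."""
    queue = deque(tasks)
    while queue:
        current = queue.popleft()
        try:
            next(current)          # run until the task's next yield
        except StopIteration:
            continue               # task finished, drop it
        queue.append(current)      # otherwise schedule it again

log = []
run_round_robin([task("writer", 2, log), task("painter", 3, log)])
print(log)
```

The scheduler never interrupts a task: it only regains control at a `yield`. A task that loops forever without yielding would freeze every other task, which is the weakness of the cooperative model.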

Apple’s Mac OS 7, with balloon tips. Still looks very much like the original Mac OS, with some improvements though.

CRASH AND RECOVERY ATTEMPTS

In 1990, as Apple is becoming less innovative and shifting towards “stable” production, including cheaper and more expandable Macs that sell quite well, Microsoft releases Windows 3, bringing several innovations, lots of them originating from the Apple world, to the world of cheaper and widely available PCs. Apple’s sales and stock drop. Apple’s CEO recognizes that Microsoft has won, stating that being the best is less important than caring about software developers and offering an open and feature-rich system.

For a while, Apple tried to gain a foothold in the PC market by making Mac OS run on PC hardware through the Star Trek project, with help from Intel and Novell. But the project was later cancelled, as Apple decided instead to focus on making more powerful computers by switching from Motorola 68000 series processors to the PowerPC architecture, developed together with IBM and Motorola.

Facing a risk of annihilation, Apple tried to push the Macintosh into the consumer market by adding new product lines with lower price tags and a home-oriented software bundle. However, the difference between models at different prices was not always properly understood, and caused confusion as vendors couldn’t explain it either. Apple’s reputation suffered.

Then Apple tried to introduce new non-computer products: digital cameras (QuickTake), portable CD audio players (PowerCD), speakers (AppleDesign Powered Speakers), video game consoles (Apple Pippin), and TV appliances (Apple Interactive Television Box). Sadly enough, those products came to market a bit too late, and were often underpowered and/or too expensive compared with competitors.

Apple had also started trying to reinvent personal computing (again) some time before, by beginning work on the Newton software platform, designed for A4-sized tablet computers (already!). But fearing that the new product might compete with the Macintosh, they decided to use it on small, low-powered computers that depended on a desktop machine, and invented the PDA. The final product included:

- Emphasis on high-level programming languages: Newton introduced NewtonScript, a programming language which was similar in concept to AppleScript (see above) but was of even higher level, had a very different syntax, and was better suited to a power-constrained environment with a small amount of memory. High-level programming languages are programming languages where one does not have to care much about the underlying hardware. Characteristics common to all high-level programming languages are:

- A better focus on what the program has to do, and not how it can do it.

- Better portability to new hardware platforms, meaning that less work has to be done in order to make the program run on new hardware

- Worse performance, since the program cannot take account of the hardware’s special abilities or limited capabilities in order to optimize its behavior according to it.

- Hard to learn, impossible to master, and a different kind of complexity in everyday work. Hardware is simple: it can only do a few simple things. Low-level languages reflect this simplicity, as they only rely on the few basic concepts that are needed in order to interact with the hardware. On the other hand, high-level programming languages introduce commands and concepts focused on very specific things, meaning that more has to be learned before being able to do some simple tasks, and that the documentation one has to use with them is huge compared to that of a low-level programming language. To sum it up: very low-level languages like assembly are complex because they make the developer think like a machine. Very high-level languages like C# or Python are complex because they require the use of a huge manual and the memorization of a lot of concepts. The simplest languages lie somewhere in between, and where exactly varies from one developer to another.

- Microkernel design: From a hardware point of view, there is an important distinction between kernel-mode software, which has direct access to core functions of the hardware such as I/O and clock management, and user-mode software, which can basically only do computations and store data in RAM without help from kernel-mode software. Early hardware only supported running kernel-mode software, and hence most applications still ran in kernel mode when user mode made its appearance. However, for stability and security reasons, the part of the software which runs in kernel mode should be as limited as possible. Therefore, newer operating systems, like that of the Newton, try to minimize the part of the software running in kernel mode. This operating system characteristic is called a microkernel design.

- Shared data between applications: NewtonOS introduced the concept of “soups”, large databases accessible to all applications. It allowed, as an example, text notes to be easily converted to appointments in relevant apps. It made the whole OS easily extensible, too, so that plugins could sometimes be confused with core system functionality. At the same time, it still allowed the use of private data, of course, though at the beginning private files still required the use of a soup, before the second release of NewtonOS removed this limitation.

- Handwriting recognition: Apple perceived this feature as one of the keys to the Newton’s success. However, it proved to be extremely difficult to implement, leading to its well-known low reliability on early Newton units.

- Various UI tweaks : Sound feedback to user input, tabs for switching between different documents (ChromeOS, anyone ?), ability to rotate the screen…

- Chaotic development : Making the Newton become a stable and reliable platform was an engineering challenge, and it took several releases of Newton-powered devices to be done. Apple put huge monetary and human resources in the project, and even with that it took about five years to release the first units. The fact that the whole project’s focus was often lost by the team and that several engineers were competing with each other didn’t help.

- Extensive office suite: After all, it’s the core part of any PDA, but NewtonOS was especially impressive, offering a word processor with capabilities close to those of desktop ones, a note-taking application that allowed writing and sketching at the same time, and extensive contact information.
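The shared “soups” idea from the list above can be sketched in a few lines. This is only a conceptual model, not the NewtonOS API: the `Soup` class, its `add` and `query` methods, the `note_to_appointment` function, and the sample data are all hypothetical names chosen for illustration.

```python
class Soup:
    """A tiny stand-in for a shared store that every application can read and write."""

    def __init__(self):
        self._entries = []

    def add(self, entry):
        self._entries.append(dict(entry))  # store a copy so callers cannot mutate it later

    def query(self, **criteria):
        """Return copies of all entries whose fields match every given criterion."""
        return [dict(e) for e in self._entries
                if all(e.get(k) == v for k, v in criteria.items())]

def note_to_appointment(note, when):
    """Hypothetical conversion done by a calendar app on a note it did not create."""
    return {"kind": "appointment", "title": note["text"], "when": when}

shared = Soup()

# The notes application stores a note in the shared soup.
shared.add({"kind": "note", "text": "Lunch with Jef"})

# The calendar application finds that note and turns it into an appointment.
for note in shared.query(kind="note"):
    shared.add(note_to_appointment(note, "Friday 12:00"))

appointments = shared.query(kind="appointment")
print(appointments)
```

Because both applications go through the same store, the calendar never needs to know how the notes application saves its files, which is what makes this kind of cross-application conversion cheap.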

A NewtonOS powered PDA

The Newton OS was finally released in 1993. Having been delayed multiple times and still having imperfect handwriting recognition, the Newton came to market too late and eventually met the same fate as Apple’s other new divisions, even though it was a very nice try. The best illustration is that today, many years after production ceased, the Newton still has its fans on the Internet, despite having been a monumental commercial failure.

As Apple still didn’t manage to turn a profit, the new CEO decided to allow third-party manufacturers to make computers compatible with Mac OS 7. Then the long-awaited PowerPC processor finally came out and was included in Macs. Sadly, it didn’t work too well either, first because Apple lowered build quality in order to reduce costs, to the point of selling the first self-igniting laptop (PowerBook 5300), and second because the companies selling Macintosh clones became serious competitors, as they knew better than Apple how to make reasonably priced computers.

COPLAND

In 1995, Microsoft releases Windows 95, making Mac OS the last desktop computer operating system that uses inefficient cooperative multitasking. At the same time, Apple hasn’t evolved much since MacOS 7.

Basic ideas for a successor to Mac OS 7 date back to the late 80s. “Pink” gathered the long-term features moved out of Mac OS 7 development because they couldn’t be completed in time. As time passed, developers kept adding more and more feature ideas, a phenomenon known as feature creep and known to result in bloated and buggy, or never-shipped, operating systems. Ported at some point to the IBM PC in a project called “Taligent”, the resulting product eventually became IBM’s sole property, leaving Apple with no modern OS to work on.

At the same time, Mac OS 7 kept becoming more and more unstable, due to excessive use of kernel-mode software, including third-party software (added to make up for its weak areas). Development of its new successor, Copland, started in 1995. Its main goal was to introduce a new system architecture for Mac OS, initially including:

- Virtualization: Copland had to run Mac OS 7 applications for obvious compatibility reasons. It hence included a big part of System 7, but this part was not running directly on top of the hardware and did not access it directly; it was instead running in a “blue box” on top of a microkernel operating system.

- Clean PowerPC support : Mac OS 7 did run on top of the PowerPC architecture, however it did so through the use of several hacks (for old parts of the system and applications originally running on the Motorola 68k processors of the first Macintoshes). Copland was supposed to get rid of these hacks, for the sake of increased reliability and performance.

- Incremental development plan : Originally, that was about all Copland was supposed to do. Plans for it didn’t even included support for multitasking or multi-processor hardware. The idea was to introduce the basic microkernel system, with only enough features on it to run Mac OS 7, make it stable, sell it, then gradually add up features to it up to the point where it could totally replace the old OS 7 parts. Though bad from an innovative point of view, as modifying existing software is way harder than making new one, it was best for compatibility reasons.

Then feature creep and focus loss occurred : as more developers began working on Copland, each one introduced new ideas from its former project in it, making the feature list always longer, quicker than one could check it. Testing became more and more difficult because of this, and hence development slowed down. As it slowed down, more features had to be introduced in order to justify the delays. Copland shifted from its initial goal of making a clean and simple base to a new operating system and became some kind of wishlist for Apple engineers where every new technology had to be introduced. As the project became larger, developers started to work in an independent fashion, making the focus loss even more painful.

At some point, Apple had to show a developer preview of Copland. This preview pictured the terrible state of the project more than anything else : it had no coherence, lacked basic features like text editing, and was extremely unstable, often corrupting its own system files and needing to be re-installed. A developer release, some times later, often crashed after doing nothing at all.

Hoping to salvage the situation, Apple’s CEO hired a former colleague from National Semiconductor, Ellen Hancock, to take over engineering and put Copland development back on track. After a while, she decided that the situation was hopeless, and that the best option was to buy an existing operating system and put a “blue box” on it. After studying the market, she suggested buying NeXT, the company Steve Jobs had founded in the meantime.

Back on his throne, Steve Jobs thanked Hancock by repeatedly making jokes about her and giving her a much reduced quality assurance job, which she quickly left. According to her plan, several new releases of OS 7 were rolled out, only introducing minor features and gradual improvements like background pictures (finally !) and assistants (programs that help the user do complicated tasks). At some point, Jobs changed the name to OS 8, to prevent clone makers from making Mac-compatible computers (they only had a license to make OS 7-compatible computers). Some of Copland’s more advanced technologies were introduced in the next major release of Mac OS, namely Mac OS X.

STEVE JOBS IS BACK

Steve Jobs was back. After peeing on the sidewalk by making several changes to the company’s hierarchy, he went back to real business and introduced a new kind of computer, the iMac, released in 1998, announcing at the same time the future launch of a totally new system from Apple, Mac OS X.

The first iMac, the G3, was, for the first time in a while, an interesting desktop computer from Apple from a hardware point of view.

- It featured new technology, part of which came from the PC world (RAM and hard drive technology), and part of which was new and quite exciting (USB, FireWire, the progressive removal of the fan *without crashing*, and, in subsequent releases of the iMac, AirPort, an early implementation of Wi-Fi that was theoretically interesting but practically unreliable).

- It lacked a lot in basic areas such as expandability (none. Oh, no, I forgot, you can add some RAM), component replacement (poor. If only your HDD is dead, you have to either buy a new iMac or pay very high fees to Apple’s customer service), and diskette data storage (none. One has to remember that in 1998, USB flash drives did not exist : removable storage included diskettes, ZIP drives (bigger diskettes with 100-250 MB of storage space), and recordable CDs. The iMac only included hardware support for the latter, even though it was clearly the worst solution for everyday storage, being terribly slow and hard to manipulate, as writing new data was only possible on expensive CD-RWs and required erasing the data on the CD completely first. Jobs justified this by saying that people would use the Internet as a storage medium, a funny joke at the time of 4 KB/s pay-per-hour internet access. Basically, if you wanted to back up or transmit data on an iMac, you had to buy a diskette drive separately).

- In design/power trade-offs, design always won : The iMac went away from the usual “gray cubic computer” to a colored and curved design. As it was a major feature, Jobs would not allow mere engineering concerns to go against it. This “feature” is often held responsible for the two preceding shortcomings, and is officially responsible for the low-powered 88-key iMac keyboard, as the keyboard had to be no larger than the screen.

- Reasonably priced : This is as unusual for an Apple product as good performance and a bug-free experience are for Microsoft software, and hence has to be mentioned.

The iMac, an interesting mix of design and technology.

Then Apple introduced, along with subsequent releases of the iMac and portable siblings keeping its main characteristics (childish look and color included), new releases of Mac OS in the 8 and then the 9 line, made to ease the transition to Mac OS X while adding some new features too. These releases included :

- Mac OS X-ready technology : HFS+ file system (much bigger files allowed, Unicode file naming, and other features strangely similar to the NT file system released some time before by Microsoft), new search engine (able to search more quickly and to look for data other than files through the use of plug-ins), updating software including auto-update capabilities, the Carbon API for developing applications working on both systems, and modifications to the core operating system so that it could run on top of Mac OS X for application compatibility reasons, in a Copland-like way.

- Multiple user management : Ability to define multiple users with passwords, and user rights management to some extent.

- Voice authentication : Mac OS 9 could identify both the phrase spoken and the voice speaking it, for increased security.

- File encryption and password management : The title says it all.

- New multimedia features : QuickTime becomes compatible with more video formats, and Apple introduces iTunes, a music player with the ability to organize music, and iMovie, a basic video editing application.

- iTools : Various internet-related tools, not included in the system but available as a free download. They include an @mac.com email address, the iCards greeting cards service (bundled with free Apple ads), the iReview collection of reviews of websites and the KidSafe directory of family-friendly websites (with most of the reviewed websites talking about Macs, even though this is not statistically possible if the websites are picked at random), the HomePage web page publishing service (with Apple ads on top or on bottom), and the iDisk online data storage service (no, no Apple marketing in that one). As one would guess, using it was free of any financial charge for its users, so that most Mac users would quickly start using it and provide free publicity for Apple.

MAC OS X

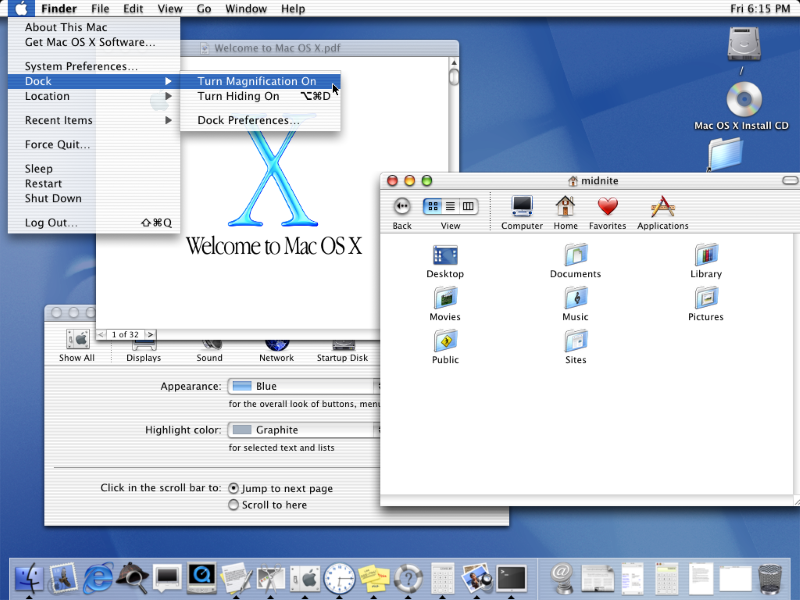

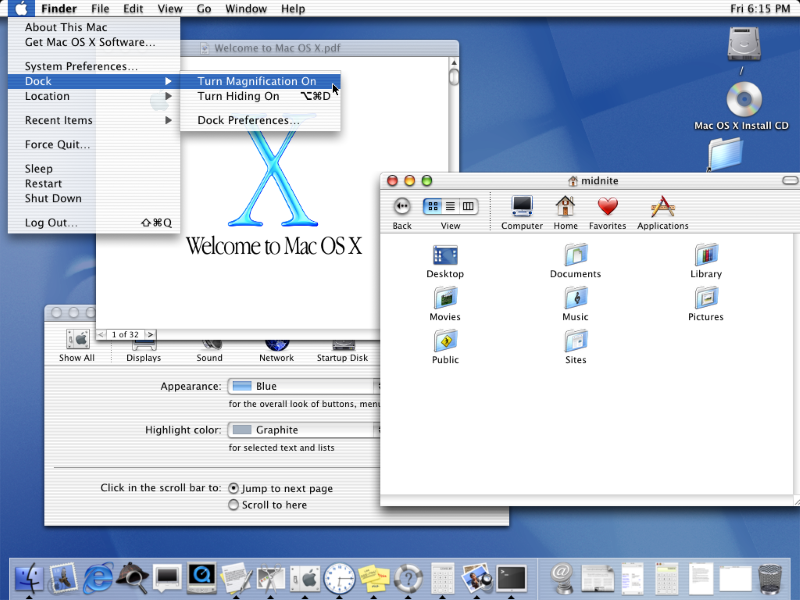

In 2001, Apple introduced Mac OS X, a successor to the old Mac OS operating system. While the name was similar, it was very different under the hood. The first version, Mac OS X 10.0 “Cheetah”, included :

- A brand new core : Mac OS X’s core is one of the many flavors of UNIX. If you want to know more about what UNIX is and what it can do, refer to the next article. I’ll focus specifically on Mac OS X-specific features here. Suffice it to say that OSX notably now supports preemptive multitasking and memory protection, technologies that other OSs had been using for years, for the sake of increased reliability, stability, and security of future releases.

- Bells and whistles : A lot. To celebrate OSX’s full support of modern graphical hardware through the use of OpenGL technology, Apple introduced a lot of visual effects like translucency in menus and in the Dock along with a sort of vacuum cleaner effect when minimizing windows (most of them being managed by software in the beginning, as most Macs were lacking in the graphics area). OSX also introduced a new general look, shiny and bright, to reflect its modern status.

- The Dock : A new way to manage and launch commonly-used applications. On the left of the Dock are icons which represent either application launchers or launched applications. Clicking on a launcher opens the corresponding application, whereas clicking on a launched application’s icon brings it to the foreground of the screen. On the right of the Dock is the trash, along with tiny pictures representing minimized windows.

- Various tools : Former Mac OSs included third-party email clients. Mac OS X 10.0 introduces Mail, an e-mail application entirely written by Apple, along with an address book (called Address book) and a text editor (TextEdit). Applications now all have PDF output available, meaning that any document can be “printed” to a PDF document if needed. Of course, OSX included a PDF reading app.

- Incomplete network support : Ironically, OSX 10.0 doesn’t support Apple’s proprietary AppleTalk network protocol. As a server, only some internet protocols are supported, too : afp, http, ftp, ssh.

- Lacking feature parity with OS9 : OSX may run OS9 in order to run Mac OS 9 applications. However, there aren’t many OSX applications available, which is logical for an OS which has just been released. This goes to the point that several simple features of Mac OS 9, like DVD playback and data CD burning, are now missing, leading users to wonder why they should upgrade. Also, as with every new OS, hardware support was poor.

- Bloat and unreliability : Where Mac OS 9 was somewhat slow on the hardware of the time, OSX 10.0 is far, far worse. Only newer Macs can run it, and making it run smoothly is impossible, as the interface remains very unresponsive. Second, while theoretically cleaner than Mac OS 9, OSX is still young and crashes easily, far more easily than older releases of Mac OS that have years of experience behind them.

OSX 10.0, with its Dock and its shiny and translucent effects.

Apple then introduced a minor release, Mac OS X 10.1 “Puma” (yes, they’re all named after big cats), which improved performance, introduced CD burning and DVD playback, and improved printer and scanner support by introducing a dedicated application to manage them and a new color management API called ColorSync. This release was the first to be bundled with Mac computers.

In the meantime, Apple wanted to prove that the Mac was the multimedia platform of tomorrow, and hence released a portable media player specifically designed for it, the iPod. Suffice it to say for now that it was initially made to work only on Macs (mandatory use of the iTunes music software in order to transfer data, FireWire connectivity, pricing so high that only real Mac users would want to buy it…), but that a PC version was later made so that it could sell well, and that it was a breakthrough in that business thanks to its extremely good look and ergonomics.

Then they released a new kind of iMac, the iMac G4, which, while keeping its older siblings’ main characteristics, included a new look, a freely orientable flat screen, and a much, much higher price tag that led it to never sell very well. A low-end computer, the eMac, was introduced in order to address this lack. It looked like the old iMac G3, and sold way better.

The iMac G4

Then, in 2002, Apple introduced Mac OS X 10.2 “Jaguar”, which included :

- More speed, but only on newer hardware : Jaguar needed more RAM to run, and now displayed all its shiny effects through the use of a graphics card, using the new Quartz Extreme API. For computers meeting both hardware requirements, it ran way smoother than Puma (at the expense of much lower battery life on laptops, since the video hardware now had to be turned on constantly). For others, it was even worse.

- CUPS : Apple introduced a brand new, more powerful and reliable printer management system, entirely stolen from the UNIX world.

- Focus on system-wide features : The Finder now had search boxes everywhere, Address Book data was now usable by all applications, and Apple introduced two new system-wide features : Universal Access (features for blind, deaf, and disabled people) and Inkwell (handwriting recognition).

- Journaling : HFS Plus was now able to record the changes about to be made to files before actually making them, so that in case of a power failure or system crash, corruption and loss of data were less likely and recovery was easier.

- Better networking : Jaguar introduced network peripheral auto-configuration through the brand new Rendezvous software (later renamed Bonjour), using the Zeroconf protocol. It also introduced Windows networks support, a spam filter in Mail, and official support for web search in Sherlock.
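The journaling idea mentioned in the list above can be illustrated with a minimal Python sketch. This is a toy in-memory model, not the actual HFS Plus on-disk format, and the names (`JournaledStore`, `log`, `commit`, `recover`) are made up for the example : changes are written to a journal first, and after a simulated crash the journal is simply replayed.

```python
class JournaledStore:
    """Toy in-memory model of write-ahead journaling (hypothetical names)."""

    def __init__(self):
        self.data = {}     # stands in for the "real" on-disk state
        self.journal = []  # intended changes, recorded before being applied

    def log(self, key, value):
        # Step 1: record the intended change in the journal first.
        self.journal.append((key, value))

    def commit(self, crash_after=None):
        # Step 2: apply the journaled changes one by one.
        # crash_after simulates a power failure after N applied entries.
        for applied, (key, value) in enumerate(list(self.journal)):
            if crash_after is not None and applied >= crash_after:
                raise RuntimeError("simulated crash")
            self.data[key] = value
        # Only once everything is applied is the journal emptied.
        self.journal.clear()

    def recover(self):
        # After a crash, replay the whole journal. Re-applying an entry that
        # was already applied is harmless, since each write is idempotent.
        for key, value in self.journal:
            self.data[key] = value
        self.journal.clear()


store = JournaledStore()
store.log("a.txt", "hello")
store.log("b.txt", "world")
try:
    store.commit(crash_after=1)  # crash after only the first write landed
except RuntimeError:
    pass
state_after_crash = dict(store.data)
store.recover()
```

Right after the simulated crash only `a.txt` has been written, but since the journal still holds both entries, `recover()` brings the store back to a consistent state.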

Except for the search boxes in the upper right corner of the file explorer, Jaguar looked essentially the same as previous releases.

In 2003, Apple introduced Mac OS X 10.3 “Panther”, introducing a feature-adding frenzy leading them to claim that the new system had more than 150 new features (even though, as one may guess, most of these are extremely minor features like changing the icon of an application). Panther had the following interesting characteristics :

- New hardware requirements : For masochistic users who would want to watch OSX crawl to death on older computers, the sad news is that it won’t work with some G3 computers and all older computers from Apple.

- Massive search engine performance improvements : Through automatic indexing, which comes at the price of lowered HDD life and a small performance footprint, search is now nearly instant (it takes one or two seconds on most computers).

- Major Finder revamp : Now with a new “brushed alloy” look with flat buttons that it only shared with some Apple applications at the time (leading to some visual inconsistency), the Finder also introduces a customizable sidebar for access to commonly-used folders, secure deletion of files (as usual deletion leaves the data on disk for a certain time, for speed reasons), native support for Zip compressed files (a hugely popular feature stolen from the recently-released Windows XP), and file labels (ability to change the color of a file’s or a folder’s name, as a reminder that it’s important).

The new Finder

- Fast user switching : A user may remain logged in while another one logs in.

- Exposé : It’s now possible to display thumbnails of all open windows at once on the desktop by hitting a key, in order to quickly find one. This is especially important as the Dock and the usual switching commands (like Cmd+Tab) do not allow one to cycle through windows, only through applications, which proves to be problematic when using one of the many applications which open a lot of windows.

Apple Exposé, a new interesting way to cycle through windows

- Safari : After using Microsoft Internet Explorer as its main web browser for a long time, Apple introduced a totally new web browser to the OS X platform. Safari is based on work from the UNIX community (again…), namely the Konqueror browser. However, at the time Apple took a look at Konqueror, it wasn’t mature enough to make a decent web browser. Performance, stability and compatibility with most websites were poor, and Apple engineers did an excellent job at making it a stable, compatible, polished product. In exchange, Safari lost all of Konqueror’s “unique” features, like the ability to browse ftp repositories like usual directories : it became a very dull browser, without any special feature compared to most web browsers of that time. But a very fast and powerful dull browser : people had to wait for the introduction of Google Chrome (2008, based on the web engine of Safari, WebKit, open-sourced according to Konqueror’s licensing terms) in order to see comparable performance on other operating systems.

- X11 : X11 is the standard graphics engine of most UNIXes. Apple ported it to OS X so that UNIX programs could run on OS X with minimal modification, hence introducing a lot of software to Mac OS X in one move. However, the result is far from perfect : performance is horrible, peripheral management is extremely poor, and desktop integration with Mac OS X is close to zero. Whether Apple introduced such a horrible X11 port to the Mac on purpose, in order to make native applications look faster and better or to prevent UNIX applications from competing with them, is still a contentious point. However, the second possibility seems unlikely : why would Apple have introduced X11 at all if they feared such competition ?

- Various other features : Ability to encrypt and decrypt a user’s personal folder on the fly (FileVault), ability for TextEdit to read Microsoft Word files, faster and more complete development tools heavily based on software from other versions of UNIX, Pixlet video codec support, a font manager, an audio and video chat application (iChat AV) that only works efficiently between two Macs, Fax support… Panther was full of minor features and polish.

- .Mac integration : .Mac is the successor to the iTools software discussed earlier. Services are globally the same as before, with the addition of backup software making use of iDisk and a virus scanner trial. The difference is that people now have to pay for that service, which still includes Apple ads in all published content. In Panther, data can be synced between the computer and .Mac through software installed on the system rather than the usual web interface.

With Panther, Mac OS X became a more interesting choice for computer users : older releases were essentially about introducing and cleaning up the new core technology, but this new release was rather about answering the question “Why should I, as an average user, prefer Mac OS X over other OSs like Windows XP or Mac OS 9 ?”. Instant search, Exposé, Safari, X11, and a more powerful and intuitive Finder clearly were interesting answers to this, and Panther received much better user reviews than previous releases of Mac OS X.

In 2005, Apple introduces two new computers : the Mac mini, an inexpensive (499$) and small computer sold without keyboard, mouse, and screen, as an answer to claims that Apple was not able to make low-end inexpensive computers, and the iMac G5, the new kind of iMac which went even more extreme than previous iMacs by bundling the whole computer in the screen (the trick being to use portable computer components, which have a much higher price/performance ratio but can fit in a tiny space as required). Subsequent releases of the iMac would look this way, only improving performance, so they won’t be discussed here.

The Mac mini, an experiment at getting into the low-end computer market

The iMac G5, with a new “where’s the computer gone” ad somewhere around.

GOING INTEL

In 2005, the PowerPC processors on which all Macs are based start to show their age and are no longer able to face the competition. In particular, power efficiency is not satisfying at all, as the most powerful Macs (in the PowerMac line of products) need expensive water-cooling technology to work, while it proves to be impossible to build a satisfying laptop (that is, one with good battery life that does not burn up or burn its user) based on the latest PowerPC processor series.

Apple thus announced that they were going to move to x86 processors from Intel, one of the most common desktop and laptop processor manufacturers at the time (along with their competitor, AMD). This attracted some laughter and surprise in the computing world, as Apple had been deliberately making fun of Intel chips for years in their ads (see

http://www.youtube.com/watch?v=e6PoLiXCA40 and

http://www.youtube.com/watch?v=SvvcQpp3SYE as some famous examples).

Making the move to Intel wasn’t very hard on the system side : as a UNIX-like system, Mac OS X was easily portable from one computer architecture to another. The problem was user applications : how to make all those PowerPC-only applications run on Intel processors, and how to make developers write applications for the new Intel processors ?

First Apple introduced Rosetta, software able to emulate PowerPC code on Intel processors, allowing users to run their “old” applications seamlessly at the cost of some performance loss. Then they introduced Universal binaries, a way to make software that would run on both Intel and PowerPC Macs, and introduced automatic building of universal binaries in an update of their developer tools.
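To make the combination of Rosetta and Universal binaries more concrete, here is a small Python sketch of the decision a loader has to make. It is a simplified model under stated assumptions, not Apple’s actual file format or loader : a “binary” is just a dictionary mapping an architecture name to a code blob, and `pick_slice` and its parameters are hypothetical names invented for the example.

```python
def pick_slice(binary_slices, host_arch, can_translate=frozenset()):
    """Decide which slice of a (toy) multi-architecture binary to run.

    binary_slices: dict mapping an architecture name to its code blob.
    host_arch:     architecture of the machine we are running on.
    can_translate: architectures an emulation layer could run for us.
    Returns a tuple (architecture, mode), mode being "native" or "translated".
    """
    # Preferred case: the binary contains code for the host processor.
    if host_arch in binary_slices:
        return host_arch, "native"
    # Fallback: run a foreign slice through a translation layer, slower.
    for arch in sorted(binary_slices):
        if arch in can_translate:
            return arch, "translated"
    raise ValueError(f"no runnable slice for {host_arch}")


universal_app = {"ppc": b"<ppc code>", "i386": b"<x86 code>"}
old_app = {"ppc": b"<ppc code>"}

on_intel_universal = pick_slice(universal_app, "i386", can_translate={"ppc"})
on_intel_old = pick_slice(old_app, "i386", can_translate={"ppc"})
on_ppc_universal = pick_slice(universal_app, "ppc")
```

On an Intel machine, the universal application runs natively while the old PowerPC-only application only runs through translation. On a PowerPC machine the same universal application still runs natively, which is the whole point of shipping both slices in one file.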

All this was brought together in a major upgrade of Mac OS X, namely Mac OS X 10.4 “Tiger”, released in 2005, which included :

- Spotlight : Having managed to make a lightning-fast search engine, Apple went one step further by introducing an intelligent lightning-fast search engine. Spotlight could not only search through file names, but also through file metadata (what kind of file it is, when it was created or modified, who its author is, etc…) and sometimes file content (data from most Apple software, MS Office documents, PDF files). Spotlight also uses real-time indexing, meaning that the index is updated as the file is written. This means that search results are now instantly up to date, at the expense of worsening the drawbacks of indexing described when we talked about Panther.

- Dashboard : Software which is able to run a number of small web applications called widgets that may do some simple tasks like doing calculations, following stocks, or translating words. The concept comes from the then-popular Konfabulator application, which did about the same thing but using XML technology instead.

- Automator : A sort of GUI for AppleScript. It allows one to automate various kinds of actions from the system and compatible applications, using AppleScript or pure Cocoa commands.

- VoiceOver : In addition to older accessibility features, OS X is now able to describe aloud what happens on the screen and to receive spoken commands.

- Dictionary/Thesaurus : The new Dictionary application features both. As usual, this feature is integrated in a system-wide fashion, so that all applications may benefit from it if they want to.

- Major API overhaul : In order to support the switch to Intel and newer hardware, along with trying to make developers’ lives easier, the API of Mac OS X underwent massive changes, like the introduction of Quartz Composer (yet another new graphics library), AU Lab (a development kit for audio plugins), 64-bit support (for handling larger amounts of data), a file permission management system, early introduction of screen resolution independence, Core Image (another graphics library… this could make some think that OS X is all about looks !), Core Data (a structured data management system that provides automatic undo/redo/save options, which are unoptimized as they don’t know what they manipulate but don’t require the lazy developer to write a single line of code), Core Video (another graphics library, but hey ! This time it is about video processing, so it may manage several images !), and Core Audio (a library providing various sound management functions).

- More minor features than can be counted : Some look alterations, access to Spotlight from the desktop by using the icon in the upper right corner of the screen, menus in the Dock, a function plotting application, various improvements to the developer tools, integration of the now infamous H.264/AVC video codec, .Mac use simplification, Spotlight integration in Mail, early support for parental controls, RSS/Atom support in Safari (as even IE started to have this feature, it was about time), improvement of iChat’s capabilities…

- iBloat : The unavoidable counterpart to adding lots of features : two years before Windows Vista, showing a dramatic lead, Tiger was the first major release of a consumer operating system to forcefully introduce 1 GB memory requirements, double the HDD requirements of the preceding release, and the need for a DVD to store the operating system.
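The real-time indexing behind Spotlight, as described in the list above, can be sketched in a few lines of Python. This is only a toy inverted index under stated assumptions, not Apple’s implementation : the names (`LiveIndex`, `write_file`, `search`) and the `key:value` query syntax for metadata are invented for the example. The point is that the index is updated at the moment a file is written, so searches never see stale results.

```python
from collections import defaultdict


class LiveIndex:
    """Toy inverted index updated on every write (hypothetical names)."""

    def __init__(self):
        self.index = defaultdict(set)  # term -> set of file paths
        self.terms_of = {}             # path -> terms currently indexed

    def write_file(self, path, content, **metadata):
        # Drop what was indexed for the previous version of this file.
        for term in self.terms_of.get(path, ()):
            self.index[term].discard(path)
        # Index the words of the content...
        terms = set(content.lower().split())
        # ...and the metadata, stored as "key:value" terms.
        terms |= {f"{k.lower()}:{str(v).lower()}" for k, v in metadata.items()}
        for term in terms:
            self.index[term].add(path)
        self.terms_of[path] = terms

    def search(self, *terms):
        # A file matches only if it contains every requested term.
        sets = [self.index.get(t.lower(), set()) for t in terms]
        return set.intersection(*sets) if sets else set()


idx = LiveIndex()
idx.write_file("/docs/report.txt", "quarterly sales figures", author="Alice")
idx.write_file("/docs/notes.txt", "sales meeting notes", author="Bob")

by_content = idx.search("sales")
by_content_and_author = idx.search("sales", "author:alice")

# Overwriting a file updates the index immediately.
idx.write_file("/docs/report.txt", "budget plan", author="Alice")
after_rewrite = idx.search("sales")
```

The drawback mentioned in the text shows up here too : every single write now costs extra work (and, on a real system, extra disk activity) to keep the index in sync.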

In 2007, Apple released its answer to Windows Vista, namely Mac OS X 10.5 “Leopard”, which was about introducing a lot of new features, in order to let the proud Apple user know that the brand was still able to innovate, in contrast to Microsoft’s failure in this area. Leopard included :

- Automator maturation : Now a lot easier to use, allowing one to “record” a user action, and featuring a lot of new actions like RSS feed management, video camera snapshots, PDF manipulation, and so on, Automator starts moving from nerd-only software to software actually usable by anyone…

- Boot Camp : Since Intel Macs are more or less PCs with a shining apple on them, running PC software on them becomes imaginable. In order to attract PC users wishing to switch to the Mac platform but unwilling to lose power-hungry Windows software that virtualization can’t run efficiently, like games or professional applications, Apple makes it easy to install Windows on a Mac, side by side with Mac OS.

- Dashboard maturation : It’s now possible to create widgets from web pages using the “Web clip” feature of Safari, and Apple introduced development tools for Dashboard, called Dashcode.

- Quick Look : Quick Look is a new feature that allows one to quickly see what’s inside a usual file without fully opening it through a dedicated application, like Windows XP’s picture-related features (that are also supported by now).

- iChat maturation : With XMPP support, multiple logins, invisibility, animated icons, and tabs, iChat finally reaches feature parity with most instant messaging clients. Through heavy integration with other OS features, it goes one step further : multimedia data from almost all Apple applications may be easily shown in a video chat, along with other Quick Look-compatible data. Screen sharing is introduced, too, in order to make support somewhat easier.

- Stacks : Putting a folder in the Dock has been an option for a long time. Clicking on it used to open a menu, with all the content of the folder displayed inside of it. However, this is no longer the case in Leopard : folders are now displayed through Stacks, which are basically a different way to show what’s inside, making use of bigger icons. Stacks were heavily criticized in early releases of Leopard for only focusing on look and resulting in reduced usability. Some enhancements were made in later releases of Leopard that addressed most of those criticisms.

One of the multiple ways a Stack can be shown.

- Major UI tweaking contest : A lot of things were slightly altered in this area, for better and worse.

- The menu bar is now slightly pink and transparent, to the point that text on it was barely readable when using certain backgrounds, before Apple corrected this in a patch some time later.

- The Dock gets a 3D look with shiny reflections all around, at the expense of making it more difficult to understand which applications are running and which applications aren’t since this is now only shown through the use of a very faint blue spot under the icon, instead of a very visible arrow as before.

- Some applications (like Time Machine and the Firewall) experience settings amputation : all useful settings are gone, there’s only a big ON/OFF button left, which is somewhat reminiscent of the iPhone.

- The Finder now features “cover flow” for quickly browsing through pictures, but enabling it once makes it enabled in all Finder windows, even those without pictures, making this feature somewhat clunky. Speaking of the Finder, quick access to folders on the left now features folders auto-generated through search, but the list is now much smaller and harder to click, making it somewhat less useful.

- A unified gray theme for windows is finally introduced, after years of waiting, but there are now a lot of inconsistent kinds of buttons, including “capsule” ones, which are somewhat reminiscent of Dr. Mario but sadly do not change color when they are disabled, making it hard to know quickly which buttons are enabled and which buttons aren’t.

Luigi, come here and tell me which button is enabled, quick !

- Spaces : Yet another feature stolen from the UNIX world. Spaces allow people who often use lots of windows to put them on several “virtual” desktops and switch easily from one to another, instead of having all of them stacked up on a single desktop…

Spaces, the most blatant rip-off ever sold as a new feature.

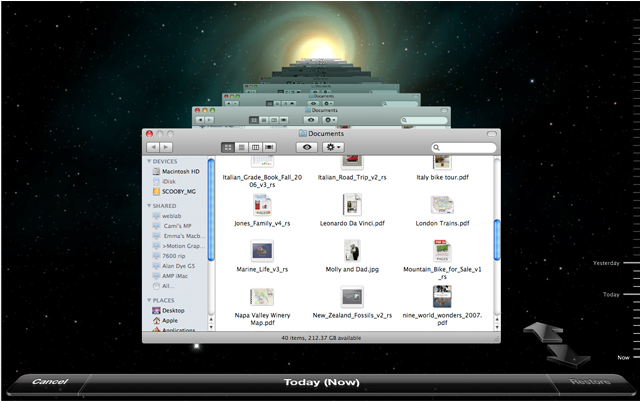

- Time Machine : Time Machine brings automatic backups to the Mac platform, in a very cool way (see following screenshot). However, for the sake of simplicity, this feature has been brutally murdered, as said above, to the point that using it becomes ironically harder than using third-party software in some areas. As an example, Time Machine requires a whole disk drive to be used solely for backup purposes, and it does not work on Wi-Fi-connected disk drives, except Apple’s Time Capsule. Also, Time Machine tries to do backups at regular time intervals but does not remind the user to do it if the disk is not present, which makes it poorly suited for laptop computers. But well, the interface for “going back in time” is so classy with its 3D navigation between backups and its timeline on the right…

Backup, the cool but crippled way.

- More or less effective security features : Leopard included several new security-related features, like random placement of libraries in memory (to prevent return-to-libc-style attacks that occur due to poorly written code), a new firewall (that does not watch traffic from some Apple applications and system-level applications), sandboxing, i.e. more fine-grained application permission control than an “allow everything or user rights only” choice (but only for applications from Apple supporting it), application signing, and a guest account. These features have been widely criticized for being fairly ineffective, only slightly improving, or even reducing system security (like with the firewall being now disabled by default).

- Lots of minor improvements : After all, Apple had to make the user believe the “Over 300 new features” statement in ads… The dictionary now supports Wikipedia and Japanese, iCal and Mail are better integrated with each other and iCal gets better tools for group work, while Mail now supports RSS feeds and various types of notes, Parental Controls now allow control over internet access, and so on… Various tweaks and updates were also introduced in the developer world, but including nothing very new except the 2.0 release of Objective-C (the programming language from Apple), and the Core Animation framework for making, in only a few lines of code, shiny animations that eat processing power only for the sake of looking good.

- Even more bloat : While not reaching Vista Ultimate’s infamous 13GB HDD requirement, OSX Leopard gets honorable mention with a minimum 9 GB requirement, with around 12 GB being needed in order to fully install all features, and comes on 2 DVDs like its Windows competitor. Well, yes, you’ll need dozens of gigabytes of data and 2 GB of RAM in order to manage files, browse the web, and play your music. That’s the way most recent software works, and if you want something to blame, you may think about feature creep, especially since Apple makes it a selling point…

- MPAA-compatible : As Apple are friends of big companies in the video industry, which made them push forward the $5,000,000/year patent-encumbered H.264/AVC codec in QuickTime and on their site, they went one step further in friendship with the devil by making it pretty hard to take screenshots from DVDs (as on Windows, it’s not impossible), and introduced garbled video when playing films using a VCR or TV set as the output.

- .Mac getting invasive : Remote desktop access is coming to the Mac through the “Back to my Mac” feature… But only for .Mac users ! This makes OS X the first platform making users pay for such a feature. Advertising for .Mac is also displayed at first boot of the OS and in some places of the system, to let you know that you really should buy it. As a compensation, .Mac now features a brand new photo and video gallery (with, you guessed it, Apple marketing).

Mac OS X Leopard was the first release of Mac OS X in a while to draw some strong criticism from the Mac community. Ironically, it underwent the same criticism as Vista : bloated, badly polished, a far from perfect UI focusing on look rather than usability, and invasive features.

Even funnier for a company that wrote “Redmond, start your photocopiers” in their ads, Apple took the same approach as Microsoft in the face of these mixed reviews and angry users, too : they patched some bugs, and made the next release less hardware-hungry and more polished, but didn’t address a single underlying problem. It worked just as well, as the new release of 2009, Mac OS X 10.6 “Snow Leopard”, got reviews as positive as those of Windows 7. It included the following :

- Spring cleanup release : This release dropped old PowerPC code, in order to improve performance on newer hardware. Support for old hardware, notably printers, has also been dropped, with drivers now only available to computers connected to the internet. As a result, the operating system gets leaner, weighing “only” around 5 GB.

- 64-bit : Most applications were ported to 64-bit code, so that they can take full advantage of newer processors (manipulating bigger numbers, new registers for quicker data access, managing more RAM for use with big data and bloated applications…). Using 64-bit instructions is now the preferred way of doing things in Mac OS X, which probably prepares a full switch to 64-bit, dropping 32-bit compatibility, some time later.

- Grand Central Dispatch : As processor manufacturers started to struggle to make a single processor run faster, their answer to the problem was the following : instead of executing a single stream of instructions faster and faster, execute several streams of instructions at the same time, on partially separate execution units (cores). However, this approach raises several issues, as it requires many changes to the way applications are programmed, in order to slice them into smaller units of code. Grand Central is a new part of the operating system made to help people code more efficiently for multi-core computers by handing part of the work to the operating system. It also allows Objective-C programmers to explicitly declare some parts of their programs as separate blocks, which the operating system then distributes across cores by itself.

- OpenCL : Graphics cards have an insane amount of processing power, which is precisely why people use them for hungry graphics tasks instead of using the central processor. To do their job better, they’re internally made to deal with a three-dimensional space and with big arrays of data (uncompressed images). However, some people lately realized that this is exactly what most scientific computation based on matrices and vectors requires. A new trend in the computing world is therefore to use the graphics card for other kinds of computation than rendering and displaying pretty pictures. OpenCL is one of the technical approaches to this problem, and it is the one that Apple chose.

- QuickTime X : QuickTime, the media infrastructure of Mac OS, underwent a heavy rewrite in Snow Leopard, in order to take advantage of new OS technologies and hardware capabilities. As such, it is not compatible with decoding plug-ins made for previous releases, which means that it did not support as many media codecs at launch time. In order to reach feature parity, an older release of QuickTime is bundled with Snow Leopard and works in parallel with QuickTime X, covering its gaps until QuickTime X is ready to fully replace it.

- A lot of polish : There’s no new feature or major UI change in this release, but existing features have undergone various changes, feeling snappier and often more complete and efficient. This includes Boot Camp now allowing Windows to read from and write to the Mac OS file system, iChat requiring lower bandwidth while allowing higher video resolution on high-bandwidth connections, Microsoft Exchange support in office applications, improved multi-touch support, the ability for Preview to select text from each column of a multi-column PDF separately, Safari stealing some new features from popular web browsers (but wait, the speed dial feature they’ve stolen from Opera and Chrome includes a shiny 3D look, making it harder to distinguish details on webpage thumbnails, isn’t that awesome ?), VoiceOver being smarter and more efficient, and various minor UI tweaks.
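To give a rough idea of what “slicing a program into separate blocks” means in the Grand Central Dispatch item above, here is a minimal sketch. It is not GCD’s API : it is a Python analogy built on the standard `concurrent.futures` module, and the names `split_into_blocks`, `sum_of_squares` and `dispatch_sum_of_squares` are made up for this illustration. The idea it tries to show is the same, though : the programmer only describes independent units of work, and a pool decides which worker runs which one.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_blocks(items, block_size):
    """Slice a list into consecutive chunks of at most block_size items."""
    return [items[i:i + block_size] for i in range(0, len(items), block_size)]


def sum_of_squares(block):
    """One independent unit of work: it shares no state with other blocks."""
    return sum(x * x for x in block)


def dispatch_sum_of_squares(items, block_size=1000, workers=None):
    """Submit every block to a pool and combine the partial results.

    The pool, not the caller, decides which worker runs which block.
    This is an analogy for the scheduling work that GCD hands over to
    the operating system, not a reproduction of GCD itself.
    """
    blocks = split_into_blocks(items, block_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_sums = list(pool.map(sum_of_squares, blocks))
    return sum(partial_sums)


if __name__ == "__main__":
    numbers = list(range(10_000))
    print(dispatch_sum_of_squares(numbers))
```

Note that in the standard CPython interpreter, threads do not speed up pure-Python number crunching like this, so the sketch only illustrates how the work is structured. The hard part in a real application remains finding blocks that truly do not depend on each other.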

CONCLUSION

Finally, I reach the end of this never-ending article… One thing that can’t be said about Apple is that they did nothing for the computing world. But what can be said globally about this company ? Apple is an innovative brand, rather good at following users’ needs, and they’ve got the many skilled engineers needed to support their ideas. Their products are usually well-polished, but often suffer from extensive feature creep (as an example, navigating in the Applications folder of a fresh install of Mac OS X Leopard is a nightmare). New features are endlessly added, but almost never removed. As a result, OS X is nowadays a bloated OS, just as bloated and resource-hungry as Windows, with the difference that the bloat is easier to notice on Windows because it is often installed on older machines, whereas OS X is only installed on new Mac hardware.

This can still be seen, though, as with Windows, by noticing that newer Macs boot up at about the same speed as older ones, despite using hardware that runs hundreds to thousands of times faster. Speaking of Mac hardware, there is another thing worth noticing about Apple, which is their darker side : Apple jails their users. They do so through…

- The “Mac-only” limitation : It is explicitly written in the Mac OS X licensing terms that you may only install it on Mac hardware, and several people have been taken to court for violating that rule. Apple fans often miss the point by saying “No, no, it’s not a bug, it’s a feature : as Apple controls the hardware on which OS X runs, they may make more optimized software and user experience than Microsoft”. The problem is not the bundling of OS X with Macs nor its limited hardware support, it’s that one may ONLY use Mac OS X together with a Mac. No matter how cool or fun Apple are, they are jerks when they force users to buy their overpriced hardware even if they don’t want to. Apple hardware is made to meet specific user needs and cannot address everyone’s (one may, as an example, think of the MacBooks’ multi-touch trackpads, which often mistake scrolling gestures for zooming gestures). It’s like only selling 6-feet-long beds because the CEO isn’t any taller and does not have children.

- Proprietary software and technology : To be fair, they share this point with Microsoft. Introducing new bright and shiny tech is nice, but there is a problem when it isn’t compatible with the common, standard technologies used elsewhere, implicitly forcing people to use Apple’s products. OS X is full of this, for example with desktop sharing bundled with iChat and extensive .Mac propaganda. Another funny example is the lack of serious free software on the Mac : X11 performance and FreeDesktop compatibility are close to zero, while most features are only accessible through proprietary libraries. This forces developers to write software specifically for the Mac, while other operating systems allow one to develop software for all computers at once.

To better picture the danger Apple represents, let’s talk about another operating system they introduced in the world of mobile devices, iPhone OS. It brings the following dirty things along…

- iTunes-only : iTunes is the only legal way to move data in and out of an iPhone, including for backup purposes. Not all data may be transferred, as one would guess : application-specific data, as an example, can’t be backed up. This notably allowed a security flaw, namely mails not really being deleted from the phone when the user deleted them, to go unnoticed. The need to use iTunes also means that if Apple chooses to stop supporting iTunes on the Windows platform, all iPhone users will be forced to buy Macs in order to get full functionality from their devices.

- App store : There’s nothing wrong with the idea of having a lot of custom applications downloadable from a manufacturer-provided website. However, when one may only download applications this way, things have clearly gone way too far. Apple uses this in order to impose marketing choices : any mention of competing products is banned from the iPhone, and free alternatives to paid-for applications disappear mysteriously. Flash and Java technologies, a very popular way of introducing little programs on web pages, are also kept off the iPhone, so that applications are always developed in an iPhone-specific way and can’t benefit competitors. Again, this also protects paid-for applications.

- Multitasking for system apps only : Some will say that this is only a usability choice, and not a dictatorial one. I ask anyone thinking this way to think twice about the following : why introduce it in system apps with notifications, in that case ? Do you really feel confused when you use multiple applications at once on a computer ? And would people really buy music for their iPhone on iTunes if they could freely listen to it through Pandora or Jiwa ?

The iPhone is a very interesting and innovative phone. Its iPad sibling could have been a good product if it were equipped with a proper OS for its size. But their OS is a real-life experiment on how far a dictatorship may go in the operating system world… And it shows how cautiously Apple must be approached.

Apple, again, are skilled, and there’s nothing wrong with the build quality of their products (except excessive feature bloat), but their philosophy is dangerous. For that reason, I wouldn’t recommend any of them without first explaining the danger behind using Apple products, and I feel that this brand has to be removed from the computer world if their behavior doesn’t change.

FINAL WARNING

A lot of Apple users are very touchy about criticism. Should anybody bring up the idea of innovation coming from Microsoft on a Mac forum, as an example, this would quickly start a flame war. Should anybody voice the opinion that the iPod is flawed by design because of its iTunes-only philosophy, he or she would be the target of the next flame war. So-called Apple-lovers also follow Apple’s tendency to rewrite the history of computer science in order to make Apple look like angels, responsible for every good thing and having never, ever, failed at anything.

As Apple gained popularity with their iThings recently, this behavior led in turn to the appearance of Apple-haters, who on the other hand try to minimize Apple’s influence on the computing world, basically saying that they only re-invented shit and that anyone stupid enough to buy Apple hardware doesn’t deserve consideration as a human being.

Globally, Apple is a brand that one hates or loves. I tried my best to remain neutral (not in the encyclopedic sense, this is a personal blog, but in the sense that I take good points from both sides), but I had to get information from somewhere nonetheless, and when talking about Apple, flawed sources of information are common. Should you notice any mistaken information somewhere, feel free to comment !

CREDITS

French :

- http://fr.wikipedia.org/wiki/Apple
- http://fr.wikipedia.org/wiki/Apple_II
- http://www.aventure-apple.com/chrono
- http://fr.wikipedia.org/wiki/IPod

English :

- http://en.wikipedia.org/wiki/Apple_III
- http://toastytech.com/guis/lisaos1LisaTour.html
- Apple Inc. – Wikipedia, the free encyclopedia
- Macintosh – Wikipédia
- http://en.wikipedia.org/wiki/History_of_Mac_OS
- http://en.wikipedia.org/wiki/Apple_Computer,_Inc._v._Microsoft_Corporation
- http://en.wikipedia.org/wiki/Newton_(platform)
- http://lowendmac.com/orchard/06/john-sculley-newton-origin.html
- http://en.wikipedia.org/wiki/Copland_(operating_system)
- http://en.wikipedia.org/wiki/Ellen_Hancock
- http://toastytech.com/guis/macos81.html
- http://toastytech.com/guis/macos9.html
- http://en.wikipedia.org/wiki/Mac_OS_X_10.0
- http://en.wikipedia.org/wiki/Mac_OS_X_v10.1
- http://en.wikipedia.org/wiki/Mac_OS_X_v10.2
- http://en.wikipedia.org/wiki/Mac_OS_X_v10.3
- http://en.wikipedia.org/wiki/Mac_OS_X_v10.4
- http://en.wikipedia.org/wiki/.Mac#History
- http://en.wikipedia.org/wiki/Mac_OS_X_Leopard
- http://en.wikipedia.org/wiki/Mac_OS_X_v10.6
- http://www.thinkmac.co.uk/blog/2007/10/leopard-stupidity.html
- http://toastytech.com/guis/osx15.html

The mouse click on the button is sent in the form of a cryptic message (1) to a process called the mouse driver, which decodes it and interprets it as a click. A higher level of abstraction may be required, especially if several mice or a multi-touch screen are in use, through a “pointer manager” to which the click is redirected (2). Whether this happens or not, a process managing the UI of all programs is finally informed (3) that a click occurred at a given position on the screen. Using some internal logic, it determines which program is concerned by the click, and that a button was clicked. It then finds out how the program wanted to be informed that someone clicked on this button, and informs it as required (4). The program executes the planned instruction, hiding the button. But it can’t do it all by itself, so it tells the UI manager process to do so (5). The UI manager determines which effect hiding the button will have on the window of the program (showing what’s behind), and tells a drawing process to display said effect on the screen (6). The drawing process turns what the UI manager said into simple, generic graphics instructions (e.g. OpenGL commands) that are transmitted to a graphics driver (7), whose job is to communicate with the graphics hardware. This driver turns the hardware-independent instructions into cryptic model-specific instructions, which are then sent to the hardware so that the screen changes to reflect the disappearance of the button (8).

As we can see, even the simplest GUI manipulations involve a significant amount of work. This emphasizes the importance of using isolated and independent programs : a single program doing all this would be huge, which is a synonym for slow, buggy, and hard to modify. We also see that our multi-program design will require, for performance reasons, that communication between processes be fast.
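The chain of eight messages described above can be sketched as a toy simulation. Everything in it is hypothetical : the process names, the message formats, the `BUTTONS` layout and the `deliver` helper were invented for this illustration, and plain Python function calls stand in for real inter-process communication. It only aims to show how each isolated process receives one message, does its small job, and passes one message on.

```python
from collections import deque

# Hypothetical screen layout: one button and the rectangle it occupies.
BUTTONS = {"ok_button": (100, 40, 160, 60)}  # name -> (left, top, right, bottom)


def mouse_driver(raw):
    # Receives (1): decode the "cryptic" hardware tuple into a generic click.
    buttons, x, y = raw
    if buttons & 0x01:
        return "pointer_manager", {"event": "click", "x": x, "y": y}
    return None


def pointer_manager(msg):
    # Receives (2): would merge several mice or touch points; here it forwards.
    return "ui_manager", msg


def ui_manager(msg):
    if msg["event"] == "click":
        # Receives (3): find the widget under the pointer, notify its owner.
        for name, (left, top, right, bottom) in BUTTONS.items():
            if left <= msg["x"] <= right and top <= msg["y"] <= bottom:
                return "program", {"event": "button_clicked", "widget": name}
        return None
    if msg["event"] == "hide_widget":
        # Receives (5): work out which region must be redrawn.
        return "drawing", {"event": "redraw", "region": BUTTONS[msg["widget"]]}
    return None


def program(msg):
    # Receives (4): the planned reaction to the click is to hide the button.
    if msg["event"] == "button_clicked":
        return "ui_manager", {"event": "hide_widget", "widget": msg["widget"]}
    return None


def drawing(msg):
    # Receives (6): emit generic, hardware-independent drawing commands.
    return "graphics_driver", {"commands": [("fill_rect", msg["region"])]}


def graphics_driver(msg):
    # Receives (7): made-up "model-specific" bytes stand in for real ones.
    return "hardware", repr(msg["commands"]).encode("ascii")


def hardware(msg):
    # Receives (8): end of the chain, nothing is sent further.
    return None


PROCESSES = {
    "mouse_driver": mouse_driver, "pointer_manager": pointer_manager,
    "ui_manager": ui_manager, "program": program, "drawing": drawing,
    "graphics_driver": graphics_driver, "hardware": hardware,
}


def deliver(target, message):
    """Deliver messages one by one; return the list of processes reached."""
    trace = []
    pending = deque([(target, message)])
    while pending:
        target, message = pending.popleft()
        trace.append(target)
        reply = PROCESSES[target](message)
        if reply is not None:
            pending.append(reply)
    return trace


if __name__ == "__main__":
    for step, name in enumerate(deliver("mouse_driver", (0x01, 120, 50)), 1):
        print(f"({step}) -> {name}")
```

Even in this toy version, a single click goes through eight deliveries, which is why the speed of message passing matters so much in such a design.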